Forecasts should specify the timeframe

Disagreements which suggest profound differences of philosophy sometimes turn out to be merely a matter of timing: the parties don’t actually disagree about whether a thing will happen or not, they just disagree over how long it will take. For instance, timing is at the root of apparently fundamental differences of opinion about the technological singularity.

Elon Musk is renowned for his warnings about superintelligence:

“With artificial intelligence, we are summoning the demon. You know all those stories where there’s the guy with the pentagram and the holy water and he’s like, yeah, he’s sure he can control the demon? Doesn’t work out.” “We are the biological boot-loader for digital super-intelligence.”

Comments like this have attracted fierce criticism:

“I don’t work on not turning AI evil today for the same reason I don’t worry about the problem of overpopulation on the planet Mars.” (Andrew Ng)

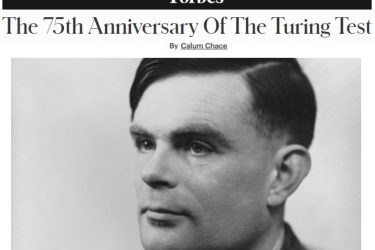

“We’re very far from having machines that can learn the most basic things about the world in the way humans and animals can do. Like, yes, in particular areas machines have superhuman performance, but in terms of general intelligence we’re not even close to a rat. This makes a lot of questions people are asking themselves premature.” (Yann LeCun)

“Superintelligence is beyond the foreseeable horizon.” (Oren Etzioni)

If you look closely, these people don’t disagree with Musk that superintelligence is possible (even likely), or that its arrival could pose an existential threat to humans. What they disagree about is the likely timing, and the difference isn’t as great as you might think. Ng thinks “There could be a race of killer robots in the far future,” but he doesn’t specify when. LeCun seems to think it could happen this century: “if there were any risk of [an “AI apocalypse”], it wouldn’t be for another few decades in the future.” And Etzioni’s comment was based on a survey in which most respondents said superintelligence was more than 25 years away: a minimum timeframe of a mere 25 years. As Stephen Hawking famously wrote, “If a superior alien civilisation sent us a message saying, ‘We’ll arrive in a few decades,’ would we just reply, ‘OK, call us when you get here—we’ll leave the lights on’? Probably not.”

Although it is less obvious, I suspect a similar misunderstanding is at play in discussions about the other singularity, the economic one: the possibility of technological unemployment, and what comes after it. Martin Ford is one of the people warning us that we may face a jobless future:

“A lot of people assume automation is only going to affect blue-collar people, and that so long as you go to university you will be immune to that … But that’s not true, there will be a much broader impact.”

The opposing camp includes most of the people running the tech giants:

“People keep saying, what happens to jobs in the era of automation? I think there will be more jobs, not fewer.” “… your future is you with a computer, not you replaced by a computer…” “[I am] a job elimination denier.” (Eric Schmidt)

“There are many things AI will never be able to do… When there is a lot of artificial intelligence, real intelligence will be scarce, real empathy will be scarce, real common sense will be scarce. So, we can have new jobs that are actually predicated on those attributes.” (Satya Nadella)

For perfectly good reasons, these people mainly think in time horizons of up to five years, maybe ten at a stretch. Over that period they are surely right to say that technological unemployment is unlikely. For machines to throw us out of a job, they have to be able to do it cheaper, better, and/or faster. Automation has been doing that for centuries: elevator operator and secretary are very niche occupations these days. When a job is automated, the employer’s process becomes more efficient. This creates wealth, wealth creates demand, and demand creates new jobs. This will continue to happen – unless and until the day arrives when the machines can do almost all the work that we do for money.

If and when that day arrives, any new jobs which are created as old jobs are destroyed will be taken by machines, not humans. And our most important task as a species at that point will be to figure out a happy ending to that particular story.

Will that day arrive, and if so, when? People often say that Moore’s Law is dead or dying, but it isn’t true. It has been evolving ever since Gordon Moore noticed, back in 1965, that his company was putting twice as many transistors on each chip every year. (In 1975 he adjusted the time to two years, and shortly afterwards it was adjusted again, to eighteen months.) The cramming of transistors has slowed recently, but we are seeing an explosion of new types of chips, and Chris Bishop, the head of Microsoft Research in the UK, argues that we are seeing the start of a Moore’s Law for software: “I think we’re seeing … a similar, singular moment in the history of software … The rate limiting step now is … the data, and what’s really interesting is the amount of data in the world is – guess what – it’s growing exponentially! And that’s set to continue for a long, long time to come.”

So there is plenty more Moore, and plenty more exponential growth. If computing power keeps doubling roughly every eighteen months, the machines we have in 10 years’ time will be 128 times more powerful than the ones we have today. In 20 years’ time they will be 8,000 times more powerful, and in 30 years’ time, a million times more powerful. If you take the prospect of exponential growth seriously, and you look far enough ahead, it becomes hard to deny the possibility that machines will do pretty much all the things we do for money cheaper, better and faster than us.
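The growth figures for 10, 20 and 30 years appear to follow from a doubling every eighteen months, with the number of doublings rounded to a whole number. The short sketch below reproduces them under that assumption; the 18-month period and the rounding are my reading of the arithmetic, not something the figures state explicitly, and `growth_factor` is a hypothetical helper written for illustration.

```python
# A minimal sketch reproducing the growth figures in the text.
# Assumptions (not stated explicitly in the text): computing power doubles
# every 18 months, and the number of doublings is rounded to a whole number.

DOUBLING_PERIOD_MONTHS = 18  # assumed doubling period


def growth_factor(years: int, period_months: int = DOUBLING_PERIOD_MONTHS) -> int:
    """Return how many times more powerful machines become after `years`."""
    doublings = round(years * 12 / period_months)  # whole doublings only
    return 2 ** doublings


for years in (10, 20, 30):
    print(f"{years} years: {growth_factor(years):,}x")
```

Under these assumptions the script prints 128x, 8,192x and 1,048,576x, which round to the 128, 8,000 and one million quoted above. Dropping the rounding, `2 ** (years * 12 / 18)`, gives about 102 after ten years and about 10,300 after twenty. The exact numbers shift with the assumptions, but the exponential shape does not, and that shape is the point being made.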

So I would like to propose a new rule, and with no superfluous humility I’m calling it Calum’s Rule:

“Forecasts should specify the timeframe.”

If we all follow this injunction, I suspect we will disagree much less, and we can start to address the issue more constructively.