Amid all the talk of robots and artificial intelligence stealing our jobs, there is one industry that is benefiting mightily from the dramatic improvements in AI: the AI ethics industry. Members of the AI ethics community are very active on Twitter and in the blogosphere, and they congregate in real life at conferences in places like Dubai and Puerto Rico. Their task is important: they want to make the world a better place, and there is a pretty good chance that they will succeed, at least in part. But have they chosen the wrong name for their field?

Artificial intelligence is a technology, and a very powerful one, like nuclear fission. It will become increasingly pervasive, like electricity. Some say that its arrival may even turn out to be as significant as the discovery of fire. Like nuclear fission, electricity, and fire, AI can have positive impacts and negative impacts, and given how powerful it is and will become, it is vital that we figure out how to promote the positive outcomes and avoid the negative ones.

This is what concerns people in the AI ethics community. They want to minimise the amount of bias in the data which informs the decisions that AI systems help us to make – and ideally, to eliminate the bias altogether. They want to ensure that tech giants and governments respect our privacy at the same time as they develop and deliver compelling products and services. They want the people who deploy AI to make their systems as transparent as possible, so that in advance or in retrospect, we can check for sources of bias, and other forms of harm.

But if AI is a technology like fire or electricity, why is the field called “AI ethics”? We don’t have “fire ethics” or “electricity ethics”, so why should we have AI ethics? There may be a terminological confusion here, and it could have negative consequences.

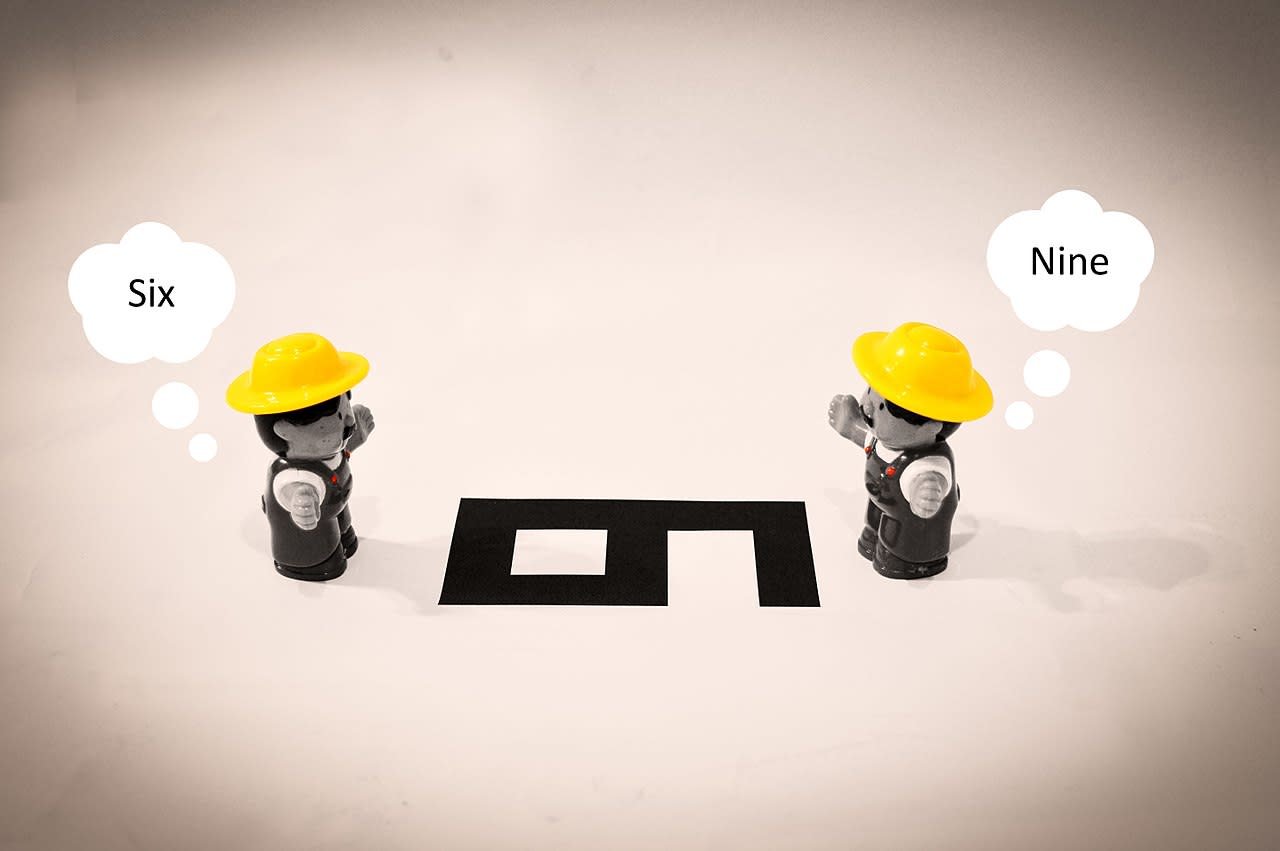

One possible downside is that people outside the field may get the impression that some sort of moral agency is being attributed to the AI, rather than to the humans who develop AI systems. The AI we have today is narrow AI: superhuman in certain narrow domains, like playing chess and Go, but useless at anything else. It makes no more sense to attribute moral agency to these systems than it does to a car, or a rock. It will probably be many years before we create an AI which can reasonably be described as a moral agent.

It is ironic that people who regard themselves as AI ethicists are falling into this trap, because many of them get very heated when robots are anthropomorphised, as when the humanoid Sophia was given citizenship by Saudi Arabia.

There is a more serious potential downside to the nomenclature. People are going to disagree about the best way to obtain the benefits of AI and minimise or eliminate its harms. That is the way it should be: science, and indeed most types of human endeavour, advance by the robust exchange of views. People and groups will have different ideas about what promotes benefit and minimises harm. These ideas should be challenged and tested against each other. But if you think your field is about ethics rather than about what is most effective, there is a danger that you start to see anyone who disagrees with you as not just mistaken, but actually morally bad. You are in danger of feeling righteous, and unwilling or unable to listen to people who take a different view. You are likely to seek the company of like-minded people, and to fear and despise the people who disagree with you. This is again ironic, as AI ethicists are generally (and rightly) keen on diversity.

The issues explored in the field of AI ethics are important, but it would help to clarify them if some of the heat were taken out of the discussion. It might help if instead of talking about AI ethics, we talked about beneficial AI, and AI safety. When an engineer designs a bridge, she does not finish the design and then consider how to stop it falling down. The ability to remain standing in all foreseeable circumstances is part of the design criteria, not a separate discipline called “bridge ethics”. Likewise, if an AI system has deleterious effects, it is simply a badly designed AI system.

Interestingly, this change has already happened in the field of AGI research, the study of whether and how to create artificial general intelligence, and how to avoid the potential downsides of that development, if and when it does happen. Here, researchers talk about AI safety. Why not make the same move in the field of shorter-term AI challenges?

This article first appeared in Forbes magazine on 7th March 2019