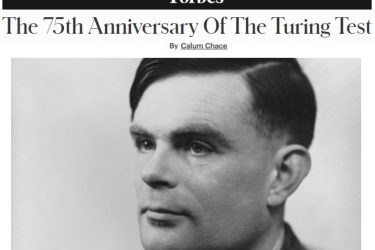

Stephen Hawking has joined the small group of people sounding the alarm about the possibility of super-intelligence arriving in the next few decades. In a post on The Huffington Post, he and three American professors at MIT and Berkeley warn that it “would be a mistake, and potentially our worst mistake ever … to dismiss the notion of highly intelligent machines as mere science fiction.”

“Artificial intelligence (AI) research is now progressing rapidly,” the post continues, arguing that Watson’s *Jeopardy!* success and self-driving cars “will probably pale against what the coming decades will bring. … Success in creating AI would be the biggest event in human history. … The short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.”

Hawking and his colleagues use the analogy of alien contact to illustrate the gravity of the problem: “So, facing possible futures of incalculable benefits and risks, the experts are surely doing everything possible to ensure the best outcome, right? Wrong. If a superior alien civilization sent us a text message saying, ‘We’ll arrive in a few decades,’ would we just reply, ‘OK, call us when you get here — we’ll leave the lights on’? Probably not, but this is more or less what is happening with AI. Although we are facing potentially the best or worst thing ever to happen to humanity, little serious research is devoted to these issues outside small non-profit institutes such as the Cambridge Center for Existential Risk, the Future of Humanity Institute, the Machine Intelligence Research Institute, and the Future of Life Institute. All of us — not only scientists, industrialists and generals — should ask ourselves what can we do now to improve the chances of reaping the benefits and avoiding the risks.”