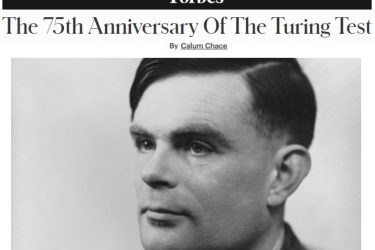

A couple of years ago Stephen Hawking told us that (general) AI is coming, and it will either be the best or the worst thing to happen to humanity. His comments owed a lot to Nick Bostrom’s seminal book “Superintelligence” and also to Professor Stuart Russell. They kicked off a series of remarks by the Three Wise Men (Hawking, Musk and Gates), which collectively did so much to alert thinking people everywhere to the dramatic progress of AI. They were important comments, and IMHO they were r

Journalists are busy people, good news is no news, and if it bleeds it leads. For a year or so the papers were full of pictures of the Terminator, and we had an epidemic of Droid Dread: the Great Robot Freak-Out of 2015.

The Terminator is an arresting image, and it’s good for getting people’s attention, but making people unreasonably scared of AI is unhelpful in the medium term. Among other things, it makes sensible AI researchers cringe, and in turn they may understate the risks inherent in AI. Or, as with senior Googlers, it makes them go silent on the subject.

So when Professor Hawking repeated his remarks during the launch of the Leverhulme Centre for the Future of Intelligence in Cambridge this week (October 2016), there was a need for balanced comment. Here is my contribution, in an interview with Sky News.

*If you’re wondering whether this title owes anything to The Big Bang Theory, you’re right.