In a July 2015 interview with Edge, an online magazine, Pulitzer Prize-winning veteran New York Times journalist John Markoff articulated a widespread idea when he lamented the deceleration of technological progress. In fact, he claimed that it had come to a halt.i He reported that Moore’s Law had stopped reducing the price of computer components in 2013, and he pointed to the disappointing performance of the robots entered into the DARPA Robotics Challenge in June 2015 (which we reviewed in chapter 2.3).

He claimed that there had been no profound technological innovation since the invention of the smartphone in 2007, and complained that basic science research had essentially died, with no modern equivalent of Xerox’s Palo Alto Research Centre (PARC), which was responsible for many of the fundamental features of computers that we take for granted today, such as graphical user interfaces (GUIs) and indeed the PC.

Markoff grew up in Silicon Valley and began writing about the internet in the 1970s. He fears that the spirit of innovation and enterprise has gone out of the place, and bemoans the absence of technologists or entrepreneurs today with the stature of past greats like Doug Engelbart (inventor of the computer mouse and much more), Bill Gates and Steve Jobs. He argues that today’s entrepreneurs are mere copycats, trying to peddle the next “Uber for X”.

He concedes that the pace of technological development might pick up again, perhaps thanks to research into meta-materials, whose structure absorbs, bends or enhances electromagnetic waves in exotic ways. He is dismissive of artificial intelligence because it has not yet produced a conscious mind, but he thinks that augmented reality might turn out to be a new platform for innovation, just as the smartphone did a decade ago. His conclusion, though, is that “2045… is going to look more like it looks today than you think.”

It is tempting to think that Markoff was to some extent playing to the gallery, wallowing self-indulgently in sexagenarian nostalgia about the passing of old glories. His critique blithely ignores the arrival of deep learning, social media and much else, and dismisses the basic research that goes on at the tech giants and at universities around the world.

Nevertheless, Markoff does articulate a fairly widespread point of view. Many people believe that the industrial revolution had a far greater impact on everyday life than anything produced by the information revolution. Before the arrival of railroads and then cars, most people never travelled outside their town or village, much less to a foreign country. Before the arrival of electricity and central heating, human activity was governed by the sun: even if you were privileged enough to be able to read, it was expensive and tedious to do so by candlelight, and everything slowed down during the cold of the winter months.

But it is facile to ignore the revolutions brought about by the information age. Television and the internet have shown us how people live all around the world, and thanks to Google, Wikipedia and their like, we now have something close to omniscience. We have machines which rival us in their ability to read, recognise images, and process natural language. Above all, the information revolution is still very young. What is coming will make the industrial revolution, profound as it was, seem pale by comparison.

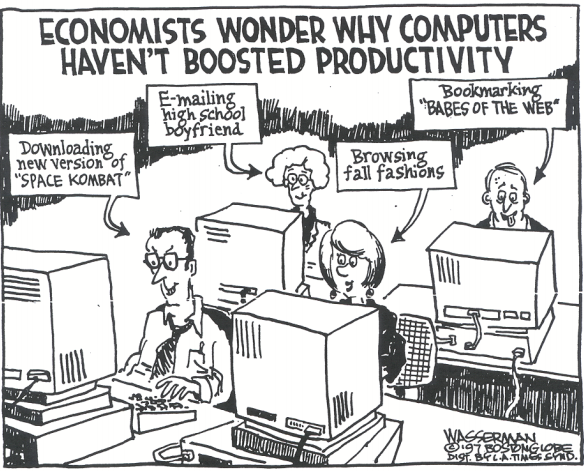

Part of the difficulty is that economists have a serious problem measuring productivity. The Nobel laureate economist Robert Solow famously remarked in 1987 that “you can see the computer age everywhere but in the productivity statistics.” Economists complain that productivity growth has stagnated in recent decades. Another eminent economist, Robert Gordon, argues in his 2016 book “The Rise and Fall of American Growth” that productivity growth was high between 1920 and 1970 and that not much has happened since.

Anyone who was alive in the 1970s knows this is nonsense. Back then, cars broke down all the time and were unsafe. Television was often still black and white, it was broadcast on a tiny number of channels, and it shut down completely for many hours a day. Foreign travel was rare and very expensive. And we didn’t have the omniscience of the internet. Much of the dramatic improvement in this pretty appalling state of affairs is simply not captured in the productivity or GDP statistics.

Measuring these things has always been a problem. A divorce lawyer who deliberately stokes the animosity between a divorcing couple to boost her fees contributes to GDP because she gets paid, yet she only detracts from the sum of human happiness. The Encyclopedia Britannica contributed to GDP, but Wikipedia does not. The computer you use today probably costs about the same as the one you used a decade ago, and thus contributes the same to GDP, even though today’s version is a marvel compared with the older one. It seems that improvements in human life are becoming increasingly divorced from the things economists can measure, and automation may well deepen and accelerate this phenomenon.

The particulars of the future are always unknown, and all predictions are perilous. But the idea that the world will be largely unchanged three decades hence is little short of preposterous.