Last week I posted an article called “What’s wrong with UBI?” It argued that two of the three component parts of UBI are unhelpful: its universality and its basic-ness. The article was viewed 100,000 times on LinkedIn and provoked 430-odd comments. This is too many to respond to individually, so this follow-up article is the best I can offer by way of response. Sorry about that.

Fortunately, the responses cluster into five themes, which makes a collective response possible. They mostly said this:

Expanding a little, they said this:

- You’re an idiot because UBI is communism and we know that doesn’t work

- You’re a callous sonofabitch because UBI is a sane and fair response to an unjust world. Oh, and you’re an idiot too

- You’re an idiot because we know that automation has never caused lasting unemployment, so it won’t in the future

- That was interesting, thank you

- Assorted random bat-shit craziness

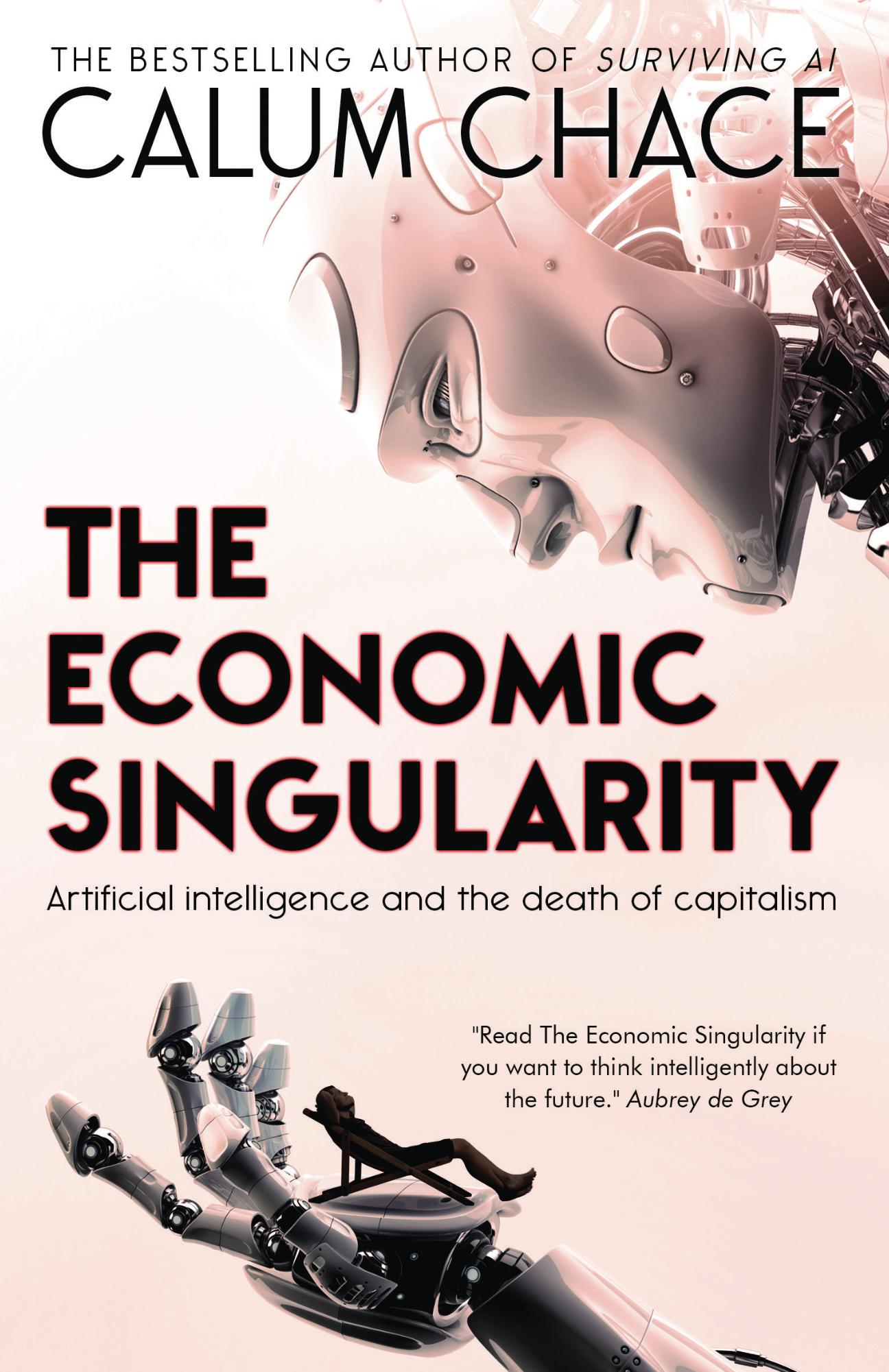

Clearly UBI provokes strong feelings, which I think is a good thing. For today’s economy, UBI is a pretty terrible prescription, and isn’t making political headway outside the fringes. But it does seem to many smart people (eg Elon Musk) to be a sensible option for tackling the economic singularity, which I explored in detail in my book, called, unsurprisingly, The Economic Singularity.

It is tempting to believe that since my article annoyed both ends of the political spectrum, I must be onto something. But of course that is false logic: traffic jams annoy pretty much everyone, which doesn’t mean they have any merit.

Anyway, here are some brief responses to the objections.

1. You’re an idiot because UBI is communism and we know that doesn’t work

Before becoming a full-time writer and speaker about AI, I spent 30 years in business. I firmly believe that capitalism (plus the scientific method) has made today the best time ever to be human. Previously, life was nasty, brutish and short. Now it isn’t, for most people. In other words, I am not a communist.

Communism is the public ownership of the means of production, distribution and exchange, and UBI does not require that. It does, however, require sharply increased taxation, and this can damage enterprise – unless goods and services can be made far cheaper. I wrote about that in a post called The Star Trek Economy.

2. You’re a callous sonofabitch because UBI is a sane and fair response to an unjust world. Oh, and you’re an idiot too

I recently heard a leading proponent of UBI respond to the question “How can we afford it?” with “How can we afford not to have it?” He seemed genuinely to think that was an adequate answer. Wow.

However, it is obvious that if technological automation renders half or more of the population unemployable, then we will need to find a way to give those unemployable people an income. In other words, we will have to de-couple income from jobs. I semi-seriously suggested an alternative to UBI called Progressive Comfortable Income, or PCI, for two reasons. First, I see no sense in making payments to the initially large number of people who don’t need them because they are still working. Second, I don’t believe all the unemployed people will or should be content to live in penury: we want to live in comfort.

A lot of the respondents to my article argued that payments to the wealthy would be recovered in tax. But unless you’re going to set the marginal tax rate at 100% you will only recover part of the payment. You are also engaging in a pointless bureaucratic merry-go-round of payments.
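To make the arithmetic concrete, here is a minimal sketch. It assumes the payment is simply taxed at the recipient's marginal rate, which is a simplification, and the payment size and tax rates are hypothetical figures chosen for illustration, not numbers from any actual UBI proposal.

```python
def recovered_in_tax(payment: float, marginal_rate_percent: float) -> float:
    """Portion of a payment clawed back if it is taxed at the marginal rate."""
    return payment * marginal_rate_percent / 100


payment = 10_000  # hypothetical annual payment to someone still in work
for rate in (20, 45, 100):  # hypothetical marginal tax rates, in percent
    recovered = recovered_in_tax(payment, rate)
    kept = payment - recovered
    print(f"rate {rate}%: recovered {recovered:,.0f}, kept by recipient {kept:,.0f}")
```

Only the 100% row recovers the whole payment. At any lower rate, part of it stays with someone who did not need it, and the money has still made a round trip through the system.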

3. You’re an idiot because we know that automation has never caused lasting unemployment, so it won’t in the future

The most pernicious response, I think, is the claim that automation cannot cause lasting unemployment – because it has not done so in the past. This is not just poor reasoning (past economic performance is no guarantee of future outcome); it is dangerous. It is also the view, as far as I can see, held by most mainstream economists today. It is the Reverse Luddite Fallacy*.

In the past, automation has mostly been mechanisation – the replacement of muscle power. The mechanisation of farming reduced human employment in agriculture from 80% of the labour force in 1800 to around 1% today. Humans went on to do other jobs, but horses did not, as they had no cognitive skills to offer. The impact on the horse population was catastrophic.

I suspect that economists either refuse or just fail to take Moore’s Law into account. This doubling process (which is not dying, just changing) means that machines in 2027 will be 128 times smarter than today’s, and machines in 2037 will be 8,000 times smarter.

I’ll say that again. If Moore’s Law continues, then machines in 2037 will be 8,000 times smarter than today’s.
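Those multiples can be checked with a few lines of arithmetic. This is a rough sketch under two assumptions that are mine rather than stated above: that the doubling period is 18 months (a commonly quoted figure for Moore's Law), and that "today" is ten years before 2027. Counting whole doublings, it lands on the same figures. Treating each doubling as a doubling of "smartness" is the article's framing, not something the code establishes.

```python
def growth_factor(years: float, doubling_period_years: float = 1.5) -> int:
    """Multiple reached after `years`, counting the nearest whole number of doublings.

    The 1.5-year default is an assumed doubling period, not a figure from the article.
    """
    doublings = round(years / doubling_period_years)
    return 2 ** doublings


for years in (10, 20):  # 2027 and 2037, assuming "today" is ten years before 2027
    print(f"after {years} years: {growth_factor(years):,} times")
```

Ten years gives 7 doublings, or 128 times. Twenty years gives 13 doublings, or 8,192 times, which rounds to the 8,000 quoted above. A slower doubling period would shrink these numbers considerably, so the conclusion is sensitive to that assumption.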

It’s very likely that these machines will be cheaper, better and faster at most of the tasks that humans could do for money.

Because the machines won’t be conscious (artificial general intelligence, or AGI, is probably further away than that), that still leaves the central role for humans of doing all the worthwhile things in life: namely, learning, exploring, socialising, playing, having fun.

That is surely the wonderful world we should be working towards, but there is no reason to think we will arrive there inevitably. Things could go wrong. It is no small task to work out what an economy looks like where income is de-coupled from jobs, and how to get there from here. Just waving a magic wand and saying “UBI will fix it” is not sufficient.

* Thanks to my friends at the Singularity Bros podcast for inventing this handy term.